Azure Kubernetes Service — behind the scenes

If you’re using AKS, the managed Kubernetes offering from Microsoft, you’re probably interested in what happens behind the scenes.

AKS control plane

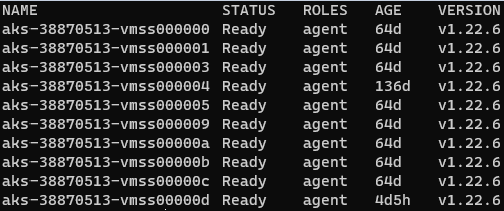

Right off the bat, if you run kubectl get nodes you won't see any nodes with the control-plane or master role. The Azure platform manages the AKS control plane, and you only pay for the AKS worker nodes that run your applications.

AKS provides a single-tenant control plane, and its resources reside only in the region where you created the cluster. To limit access to cluster resources, you can configure Kubernetes role-based access control (RBAC), which is enabled by default, and optionally integrate your cluster with Azure AD (now called Microsoft Entra ID).

AKS worker nodes, virtual machines and node pools

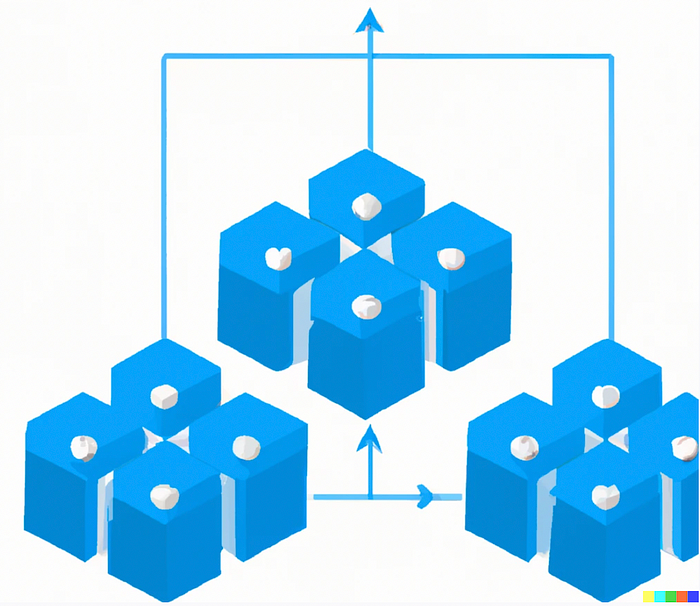

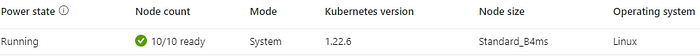

When you create an AKS cluster, Azure sets up the Kubernetes control plane for you and creates one or more virtual machine scale sets (VMSS) in your subscription to serve as worker nodes. The managed control plane comes at no extra cost; optionally, you can pay for a control plane that’s backed by an SLA.

Azure virtual machine scale sets let you create and manage a group of load-balanced virtual machines. A scale set can only manage virtual machines that it created itself, all based on the same virtual machine configuration model.

Depending on your workloads, you can choose between different virtual machine sizes (“flavors”). Some of them are:

- A-series (for entry-level workload best suited for dev/test environments)

- B-series (economical burstable VMs)

- D-series (general purpose compute)

Going one level up, AKS groups nodes of the same configuration into node pools.

Existing node pools can be scaled, upgraded, and, if needed, deleted, but they don’t support resizing in place. In other words, vertical scaling (changing the VM “flavor”/size) is not supported. The alternative is to create a new node pool with the target VM size and then either delete the previous pool or keep running multiple node pools side by side.
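The replace-instead-of-resize workflow can be sketched as a small Python helper that only builds the commands and prints them, without running anything. This is a minimal sketch, not an official procedure: the resource group, cluster, and pool names are hypothetical, and the drain step assumes the nodes carry the agentpool=<pool name> label that AKS normally sets.

```python
import shlex


def nodepool_swap_commands(resource_group, cluster, old_pool, new_pool,
                           vm_size, node_count):
    """Build the CLI commands (as argv lists) for replacing a node pool."""
    return [
        # 1. Add a pool with the new VM size next to the existing one.
        ["az", "aks", "nodepool", "add",
         "--resource-group", resource_group,
         "--cluster-name", cluster,
         "--name", new_pool,
         "--node-vm-size", vm_size,
         "--node-count", str(node_count)],
        # 2. Evict workloads from the old pool so they reschedule elsewhere.
        #    Assumes the nodes are labelled agentpool=<pool name>.
        ["kubectl", "drain", "--selector", f"agentpool={old_pool}",
         "--ignore-daemonsets", "--delete-emptydir-data"],
        # 3. Remove the old pool once nothing runs on it anymore.
        ["az", "aks", "nodepool", "delete",
         "--resource-group", resource_group,
         "--cluster-name", cluster,
         "--name", old_pool],
    ]


# Hypothetical names, used for illustration only.
swap_commands = nodepool_swap_commands(
    "my-rg", "my-aks", "smallpool", "bigpool", "Standard_D4s_v3", 3)
for argv in swap_commands:
    print(shlex.join(argv))
```

Printing the commands first makes it easy to review each step before running it by hand. If you prefer to keep both pools, simply skip the last two commands.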

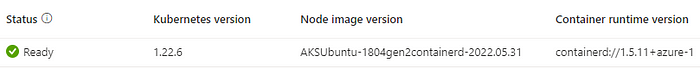

Another interesting detail is that containerd is the default container runtime in AKS, starting with Kubernetes 1.19.

AKS networking

By default, AKS clusters use the kubenet networking option, and a virtual network and subnet are created for you.

The cluster nodes get an IP address from a subnet in a virtual network. The pods running on those nodes get an IP address from a logically separate address space, different from the one the nodes use. Traffic between pods on different nodes is forwarded using user-defined routes and IP forwarding, while traffic from pods to resources outside the cluster is translated to the node's IP address with NAT (Network Address Translation). The benefit of kubenet is that only nodes consume an IP address from the cluster subnet.
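To make the address-space split more concrete, here is a rough capacity estimate in Python using only the standard ipaddress module. It is a sketch under a few assumptions that are not stated in this article: Azure reserves 5 addresses in every subnet, kubenet hands each node a /24 slice of the pod address space, and the two CIDR ranges in the example are purely illustrative.

```python
import ipaddress

# Assumption: Azure keeps 5 addresses of every subnet for itself.
AZURE_RESERVED_IPS_PER_SUBNET = 5


def kubenet_capacity(node_subnet, pod_cidr, per_node_prefix=24):
    """Estimate how many nodes fit, given a node subnet and a pod CIDR."""
    nodes_net = ipaddress.ip_network(node_subnet)
    pods_net = ipaddress.ip_network(pod_cidr)
    if nodes_net.overlaps(pods_net):
        raise ValueError("pod CIDR must not overlap the node subnet")
    if per_node_prefix < pods_net.prefixlen:
        raise ValueError("pod CIDR is smaller than a single per-node range")

    # Only nodes take addresses from the subnet.
    node_ips = nodes_net.num_addresses - AZURE_RESERVED_IPS_PER_SUBNET
    # Each node gets one per-node range carved out of the pod CIDR.
    pod_ranges = 2 ** (per_node_prefix - pods_net.prefixlen)
    return {
        "node_ips": node_ips,
        "pod_ranges": pod_ranges,
        "max_nodes": min(node_ips, pod_ranges),
    }


# Illustrative address ranges only.
capacity = kubenet_capacity("10.240.0.0/24", "10.244.0.0/16")
print(capacity)
```

The smaller of the two numbers is the practical ceiling: a generous pod range does not help if the node subnet runs out of addresses first, and the other way around.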

AKS deployment

There are multiple ways to create an AKS cluster: the Azure portal, the Azure CLI, Azure Resource Manager templates, or even Terraform.

To deploy an AKS cluster you must have a resource group to manage the resources consumed by the cluster.
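For the Azure CLI route, the order of operations (resource group first, then the cluster, then credentials for kubectl) can be sketched as follows. The helper only assembles and prints the commands. The names, the region, and the node count are hypothetical placeholders, and a real deployment will likely need more options than shown here.

```python
import shlex


def aks_create_commands(resource_group, cluster, location, node_count=2):
    """Build the Azure CLI commands (as argv lists) for a basic cluster."""
    return [
        # The resource group has to exist before the cluster does.
        ["az", "group", "create",
         "--name", resource_group,
         "--location", location],
        # Create the cluster itself inside that resource group.
        ["az", "aks", "create",
         "--resource-group", resource_group,
         "--name", cluster,
         "--node-count", str(node_count),
         "--generate-ssh-keys"],
        # Merge the cluster credentials into the local kubeconfig.
        ["az", "aks", "get-credentials",
         "--resource-group", resource_group,
         "--name", cluster],
    ]


# Hypothetical names, used for illustration only.
create_commands = aks_create_commands("my-rg", "my-aks", "westeurope")
for argv in create_commands:
    print(shlex.join(argv))
```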

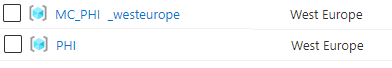

An important aspect is that an AKS deployment spans two resource groups. The first group contains only the Kubernetes service resource. The second one, the node resource group (its name starts with MC_), contains all of the infrastructure resources associated with the cluster (the VMSS for the k8s nodes, virtual networking, and storage).
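If you need to locate the node resource group from a script, its default name can usually be derived from three values. The snippet below is a small sketch that assumes the default MC_<resource group>_<cluster name>_<region> pattern and that the name was not overridden when the cluster was created; the example values are hypothetical.

```python
def default_node_resource_group(resource_group, cluster_name, location):
    """Return the default name AKS gives to the node resource group.

    Assumes the default naming pattern and no custom name set at creation.
    """
    return f"MC_{resource_group}_{cluster_name}_{location}"


# Hypothetical values, used for illustration only.
node_rg = default_node_resource_group("my-rg", "my-aks", "westeurope")
print(node_rg)
```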

Closing notes

In conclusion, AKS simplifies deploying a managed Kubernetes cluster by offloading the operational tasks to Azure, but you should always be aware of the tradeoffs that come with it. If you liked this article, you can also read about 🔎 probing Kubernetes architecture or even 📚 a tale of a Kubernetes upgrade.